We’re now well into our Macrophonics Open Source creative development project at Legs on the Wall in Sydney. There’s lots of experimentation going on at this stage, and we’ll start to narrow things down over the rest of this week, before our stint in the theatre next week. We’ve opened out the exploration to sounding objects: driving audio signals into snare drums, cymbals and other resonant objects to set them off acoustically. The idea is that certain parts of the stage area contain assemblages of resonant objects and, through video and hardware sensing, performers will be able to activate the array of objects within an auditory ‘scene’ that we create. These auditory scenes will contain flexible sonic ‘modules’: collections of sounds and musical motifs that can be recombined freely.
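A common way to locate an object’s resonant frequency is a stepped sine sweep: play a pure tone at a series of frequencies through the actuator, record the object’s response, and keep the frequency that produced the loudest response. The sketch below is a plain-Python illustration of that idea, not the setup used in the project. The `measure` callback is hypothetical (in practice it would play the tone through the actuator send and record from a microphone), and `toy_resonator` is a synthetic stand-in with an assumed resonance and Q, not measured data.

```python
import math

SAMPLE_RATE = 48000  # assumed audio sample rate in Hz


def log_spaced_frequencies(f_start, f_stop, steps):
    """Test frequencies spaced evenly on a log scale (equal ratio between steps)."""
    ratio = (f_stop / f_start) ** (1 / (steps - 1))
    return [f_start * ratio ** i for i in range(steps)]


def sine_tone(freq, duration, amplitude=0.5):
    """A short sine tone to drive the actuator at one test frequency."""
    n = int(duration * SAMPLE_RATE)
    return [amplitude * math.sin(2 * math.pi * freq * i / SAMPLE_RATE) for i in range(n)]


def rms(samples):
    """Root-mean-square level of a block of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def find_resonance(measure, freqs):
    """Return the test frequency whose recorded response has the highest RMS level.

    `measure(freq)` is a hypothetical callback: play a tone at `freq` through the
    actuator and return the samples recorded from the object.
    """
    return max(freqs, key=lambda f: rms(measure(f)))


def toy_resonator(f0, q):
    """Synthetic stand-in for a real object: a second-order resonance at f0 with quality factor q."""
    def measure(freq):
        r = freq / f0
        gain = 1 / math.sqrt((1 - r * r) ** 2 + (r / q) ** 2)
        return [gain * s for s in sine_tone(freq, 0.05)]
    return measure


freqs = log_spaced_frequencies(50, 2000, 200)
peak = find_resonance(toy_resonator(f0=180, q=10), freqs)
print(f"loudest response near {peak:.1f} Hz")
```

Equal-ratio steps give the same resolution in every octave, so low and high resonances are located with similar relative precision; a second, finer sweep around the peak would sharpen the estimate. A real snare or cymbal has several modes, and this approach only reports the strongest one.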

At the moment, we’re experimenting to find the resonant frequency of each of the trial objects and to get a sense of how the objects behave with different input signals. We’ve set up the input to the ‘actuators’ to run from discrete sends so that they can be blended and balanced with the signals from the main loudspeakers. From a control perspective, we will compare wearable sensors located on the performer (using the Lilypad Arduino platform) with video tracking from above the stage. The wearable sensors have the advantage of being robust to different lighting states, but they need to be protected from damage by the physical theatre performer, which could prove challenging. The video tracking approach is physically robust but highly light-dependent: changing light states can throw off the threshold settings in the tracking patches, so tracking becomes less reliable as the lighting changes.
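To see why changing light states upset the tracking, consider the simplest kind of tracker: find the pixels brighter than a threshold and report their centroid. The plain-Python sketch below is illustrative only (the project’s tracking patches are not reproduced here), and the frames are hypothetical greyscale grids of 0–255 brightness values. With a fixed threshold, a general lift in stage lighting pushes the background over the threshold and the centroid drifts towards the middle of the frame. One common mitigation, swapped in here as an assumption rather than something the project uses, is to set the threshold relative to the frame’s mean brightness.

```python
def make_frame(width, height, background, blob_level, blob_x, blob_y, size=2):
    """Hypothetical greyscale frame: uniform background with one bright square (the performer)."""
    return [
        [blob_level if blob_x <= x < blob_x + size and blob_y <= y < blob_y + size else background
         for x in range(width)]
        for y in range(height)
    ]


def track_bright_blob(frame, threshold):
    """Centroid (x, y) of all pixels brighter than `threshold`, or None if there are none."""
    sx = sy = count = 0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value > threshold:
                sx += x
                sy += y
                count += 1
    if count == 0:
        return None
    return (sx / count, sy / count)


def adaptive_threshold(frame, offset):
    """Threshold that follows the overall light level: frame mean plus a fixed offset."""
    pixels = [value for row in frame for value in row]
    return sum(pixels) / len(pixels) + offset


dim = make_frame(10, 10, background=40, blob_level=120, blob_x=6, blob_y=2)
bright = make_frame(10, 10, background=140, blob_level=220, blob_x=6, blob_y=2)

FIXED = 100  # hand-tuned for the dim lighting state
print(track_bright_blob(dim, FIXED))     # (6.5, 2.5): performer found
print(track_bright_blob(bright, FIXED))  # (4.5, 4.5): every pixel passes, centroid drifts to centre
print(track_bright_blob(bright, adaptive_threshold(bright, offset=40)))  # (6.5, 2.5)
```

A mean-based threshold has its own limits: uneven light (a single spot on the performer) or a performer filling much of the frame will shift the mean, so it would still need checking against each lighting state.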

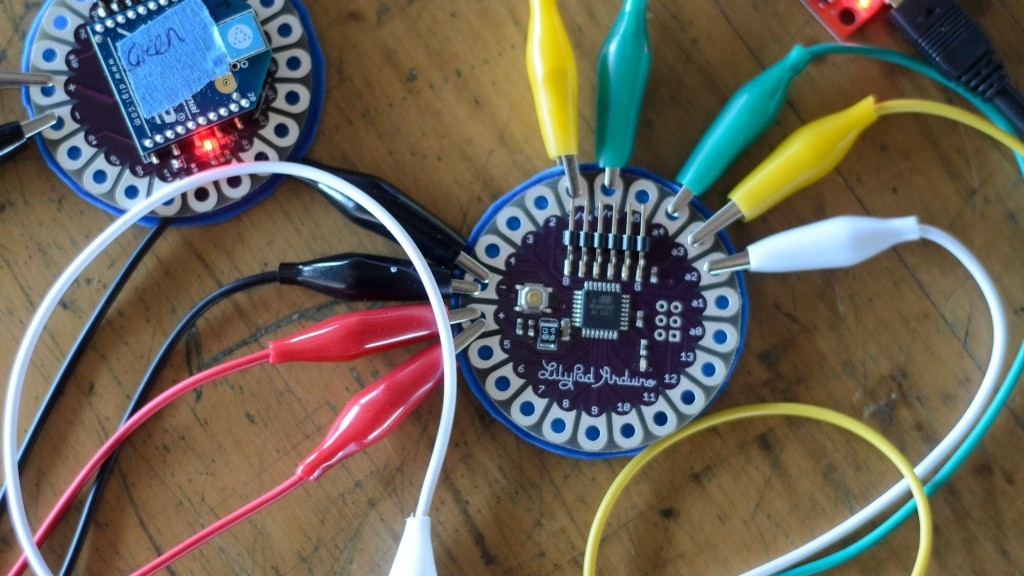

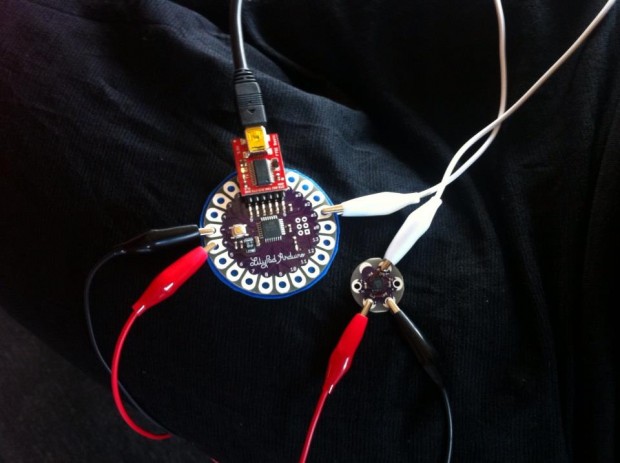

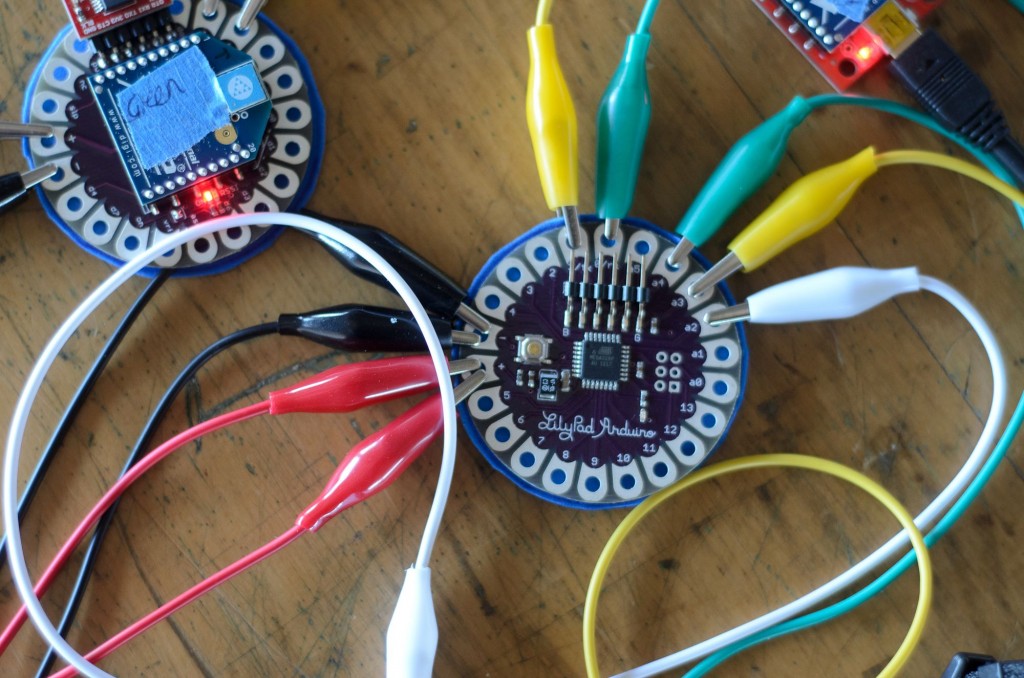

The plan is to use accelerometers on the performers’ wrists so that arm movements and rotations will output acceleration values along the X, Y and Z axes. We’ll also be trying out light sensors, flex sensors and some heat sensors on the performers. The Lilypad is a great platform for wearable computing; the main issue is the small number of analog inputs, so we will need to investigate having multiple Lilypads send data wirelessly (via the Xbee platform) to a single computer. If this poses an issue for us, we could mount a second Lilypad/Xbee set-up and have it transmit to a second receiver at a second computer. As we have not yet established an approach to mapping data and distributing it to the media performers, this second model may actually be more ergonomic. We shall see.
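When the wrist is held fairly still, gravity dominates the accelerometer’s X, Y and Z readings, so they can be turned into tilt angles (pitch and roll) and then into control values for sound. The sketch below assumes raw 10-bit readings (0–1023, as returned by Arduino’s `analogRead()`) and uses hypothetical calibration values `ZERO_G` and `COUNTS_PER_G`, which would need to be measured for the actual sensor and supply voltage. The `to_control` mapping range is also an illustrative assumption.

```python
import math

ZERO_G = 512        # hypothetical raw reading at 0 g (calibrate per axis)
COUNTS_PER_G = 100  # hypothetical change in raw reading per 1 g (calibrate per axis)


def counts_to_g(raw):
    """Convert a raw 10-bit analogRead() value to acceleration in g."""
    return (raw - ZERO_G) / COUNTS_PER_G


def tilt_degrees(ax, ay, az):
    """Pitch and roll in degrees from acceleration in g; only valid when the sensor is near-static."""
    roll = math.degrees(math.atan2(ay, az))
    pitch = math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))
    return pitch, roll


def to_control(angle, lo=-90.0, hi=90.0):
    """Map an angle onto 0..1 for driving a sound parameter, clamped at the ends."""
    return min(1.0, max(0.0, (angle - lo) / (hi - lo)))


raw_x, raw_y, raw_z = 512, 612, 512  # hypothetical reading: wrist rolled onto its side
pitch, roll = tilt_degrees(*(counts_to_g(r) for r in (raw_x, raw_y, raw_z)))
print(round(pitch), round(roll), to_control(roll))  # prints 0 90 1.0
```

Two caveats follow from the physics: rotation about the vertical axis does not change how gravity falls across the sensor, so an accelerometer alone cannot detect it, and fast arm movements add their own acceleration on top of gravity, so the angles are only reliable during slower gestures.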

In the shot above you can see the Lilypad Arduino connected to a Lilypad Xbee board, which handles the wireless communication of sensor data to an Xbee receiver at the computer. The Lilypad Xbee is currently powered from a USB cable but will soon run from an AAA battery. Once all the sensors have been connected up and prototyped in this way, the whole thing will be sewn into a garment, with the sensors connected to the board via conductive thread.
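If several Lilypads share one receiver, each needs to identify itself in the data it sends. A minimal sketch, assuming each board prints a hypothetical comma-separated line of the form `node_id,reading,reading,...` over serial and the Xbee radios simply pass those bytes through: the computer sorts incoming lines by node, keeps the latest readings from each, and discards anything garbled in transmission. Xbee modules also offer an API mode that frames each packet with the sender’s address, which would be a more robust alternative to embedding an ID in the text.

```python
ADC_MAX = 1023  # Arduino analogRead() returns 0..1023 on 10-bit boards


def parse_line(line):
    """Parse 'node_id,v1,v2,...' into (node_id, [v1, v2, ...]); None if malformed."""
    parts = line.strip().split(",")
    if len(parts) < 2:
        return None
    try:
        node_id = int(parts[0])
        values = [int(p) for p in parts[1:]]
    except ValueError:
        return None
    if not all(0 <= v <= ADC_MAX for v in values):
        return None  # out-of-range values suggest a corrupted line
    return node_id, values


def latest_by_node(lines):
    """Keep the most recent valid reading from each node."""
    latest = {}
    for line in lines:
        parsed = parse_line(line)
        if parsed is not None:
            node_id, values = parsed
            latest[node_id] = values
    return latest


incoming = [
    "1,512,498,610",  # hypothetical wrist node: X, Y, Z
    "2,300,301",      # hypothetical second node, e.g. flex and light sensors
    "1,5?2,49",       # garbled in transit
    "1,520,500,605",
    "2,9999,301",     # out of range
]
print(latest_by_node(incoming))  # prints {1: [520, 500, 605], 2: [300, 301]}
```

On the computer, the lines would typically come from the receiver’s serial port (for example via pyserial’s `serial.Serial(...).readline()`), decoded from bytes to text before parsing.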