We’ve just completed some video documentation of our mediatized performance work ‘Ghost Ships’, which we shot in the studios at Macquarie University in Sydney.

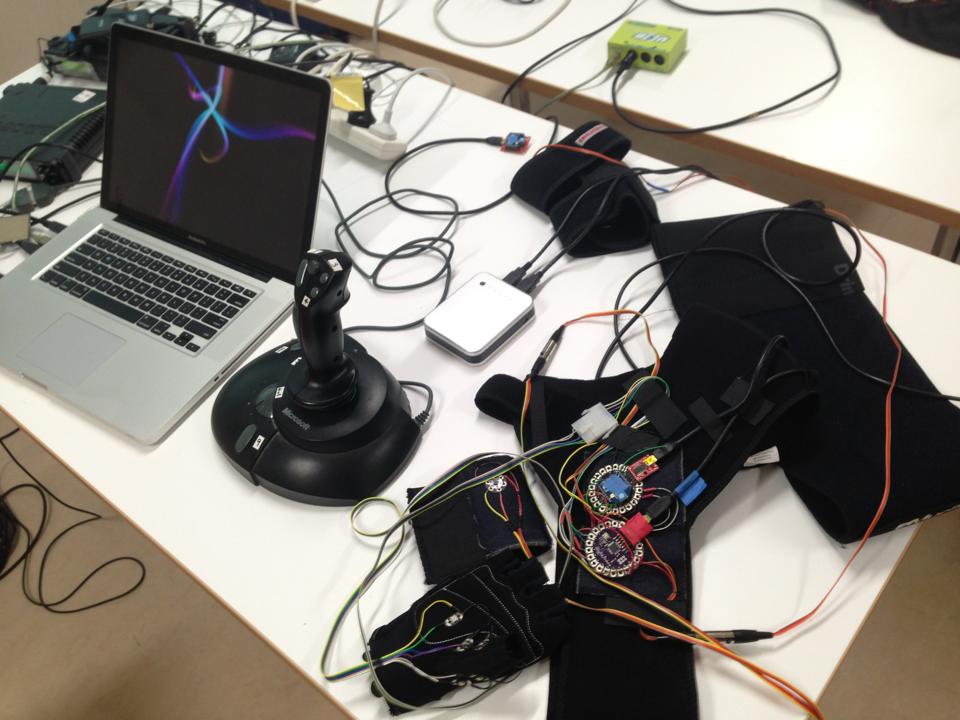

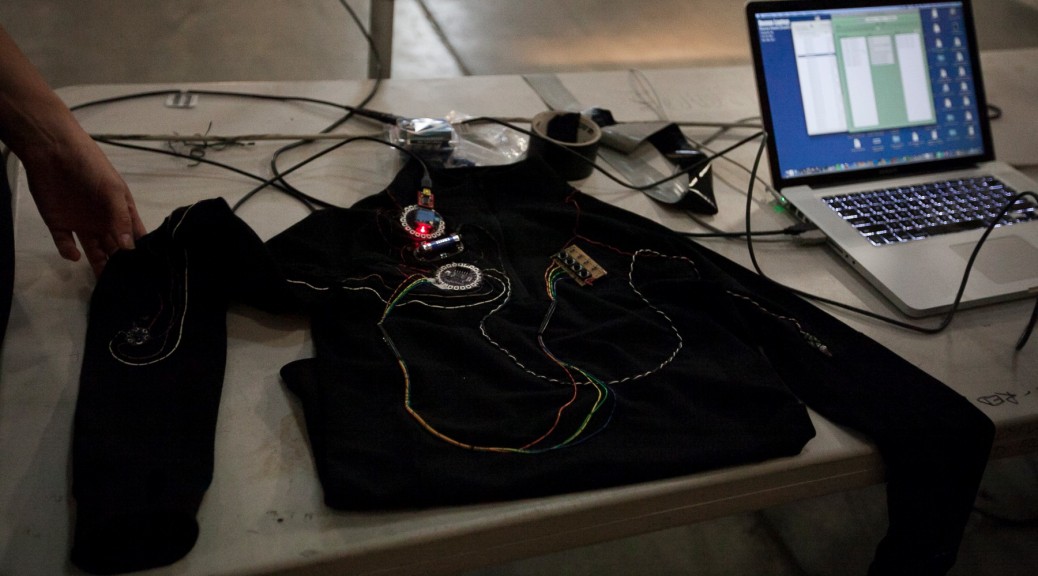

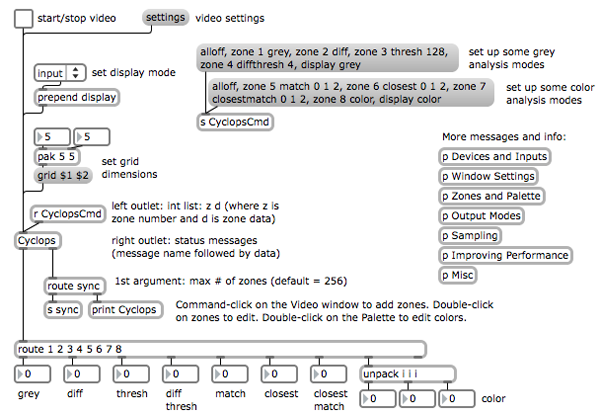

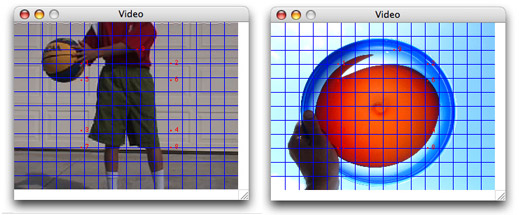

‘Ghost Ships’ is a work for a wireless wearable performance interface and intelligent lighting systems. The work uses an unencumbered wireless performance interface that lets a single on-stage performer drive all media elements through free-air gestures. The artists designed a wearable microcontroller and sensor system for the project, which allows one performer to play the entire theatrical space (light, sound and video) with arm and hand gestures. This includes an intelligent moving-light system that repositions theatrical lights and changes their parameters in response to the performer’s gestures.

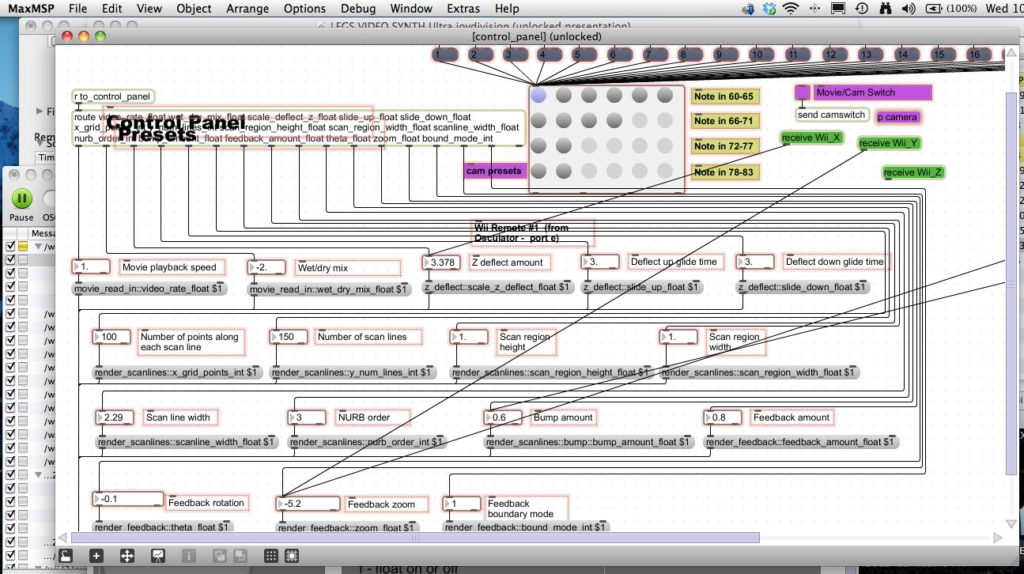

This is an evolving prototype of an interface we’ve been working on for a couple of years now. You can read about some of the R&D work here.

Software/media programming: Julian Knowles

Wearable interface design and additional programming: Donna Hewitt

Music: Julian Knowles

Performance improvisations: Donna Hewitt