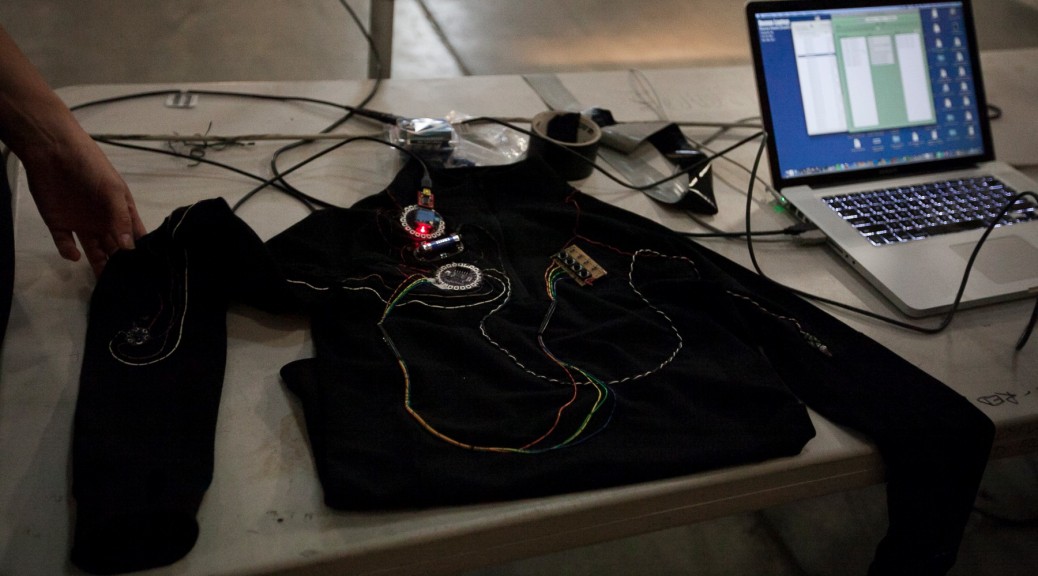

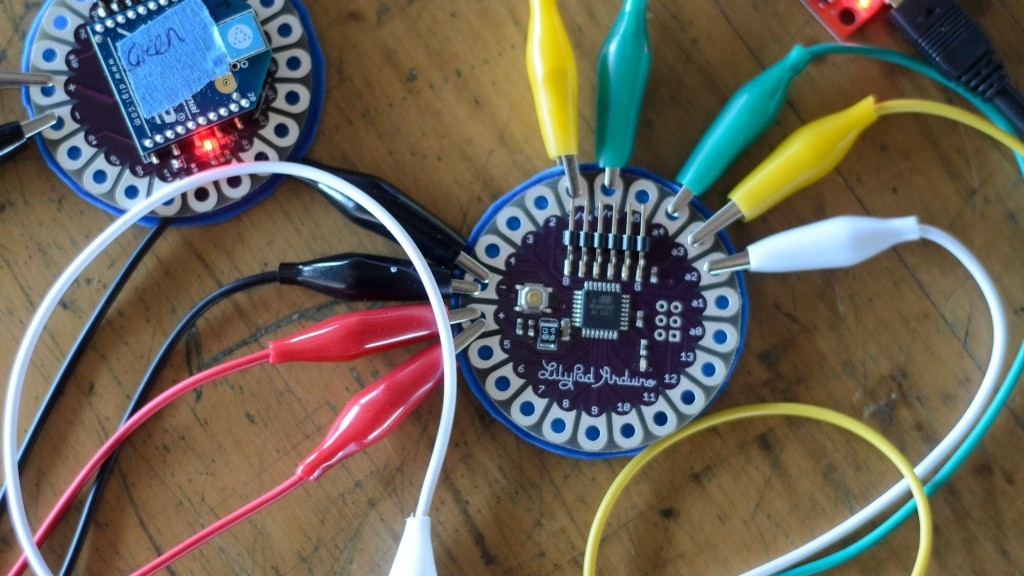

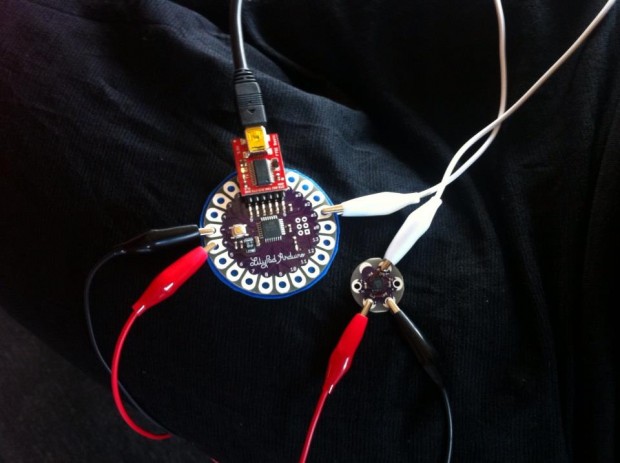

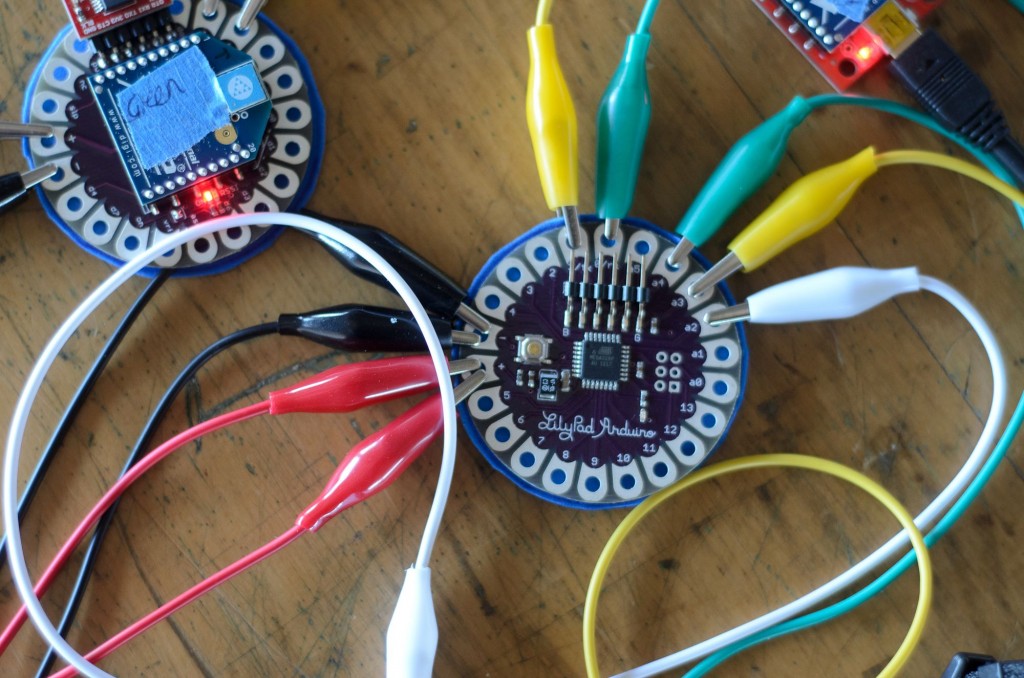

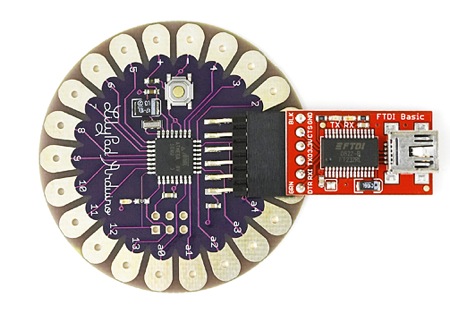

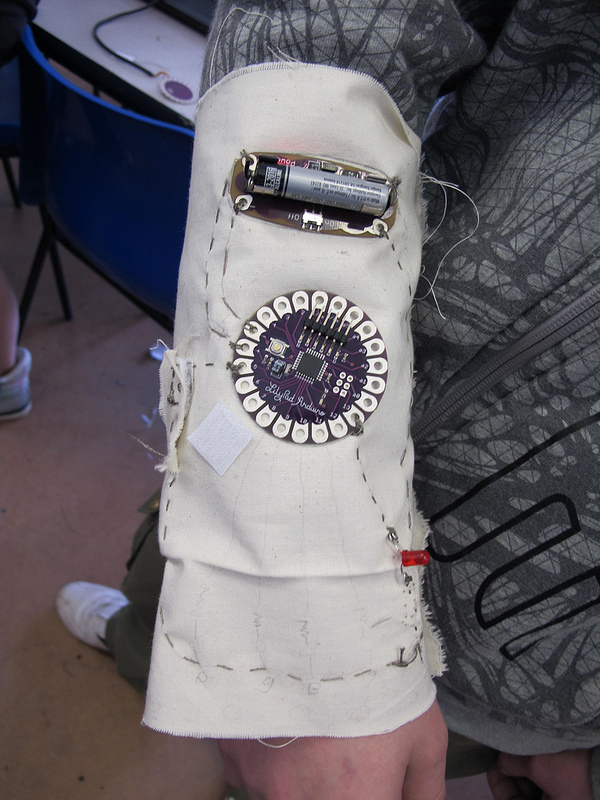

The accelerometer and wearable work progressed smoothly, without significant hitches, and Donna’s wearable was completed in the final week. A few issues are worth mentioning here. She found the conductive thread and ribbon somewhat problematic. Great care was needed to prevent threads touching and shorting out (which reset the boards), and the thread’s high resistance also caused problems. Donna therefore decided to replace most of the conductive thread with conventional insulated hookup wire. Although she was initially concerned about its appearance, the hookup wire actually looked great. Take a look.

A second issue was the total power draw of the sensors and XBee wireless system. Donna found that the single AA battery could not supply enough power to run the system properly. With insufficient time to source and fit a higher capacity battery system before the public showing of our work, she decided to power the system from a USB cable and to make the necessary modifications to the battery system after the residency.

When we connected the wearable to our test audio and video patches for the first time, the results exceeded everyone’s expectations. The wearable was highly responsive and ‘playable’, and Donna reported a fine sense of control over the media. She felt immersed in the media, and the interface was highly intuitive, providing a rich set of possibilities for gestural control. Here is a video showing the initial hookup of the wearable interface to a test audio patch, with the triaxial accelerometer and flex sensor mapped to audio filter parameters.

Macrophonics – first wearable trial
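For readers curious about what mapping a sensor to a filter parameter involves, here is a minimal Python sketch. It is not the Max/MSP patch used in the trial. The function names, the 10-bit input range (0 to 1023) and the 200 Hz to 8 kHz cutoff range are all assumptions chosen for illustration.

```python
def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Linearly map value from one range to another, clamping to the input range."""
    value = max(in_lo, min(in_hi, value))
    t = (value - in_lo) / (in_hi - in_lo)
    return out_lo + t * (out_hi - out_lo)


def cutoff_hz(raw, lo_hz=200.0, hi_hz=8000.0, adc_max=1023):
    """Map a raw sensor reading to a filter cutoff on an exponential curve.

    The ranges here are hypothetical; an exponential curve is used because
    equal steps in sensor value then give roughly equal steps in pitch.
    """
    t = scale(raw, 0, adc_max, 0.0, 1.0)
    return lo_hz * (hi_hz / lo_hz) ** t


if __name__ == "__main__":
    # Hypothetical readings from one accelerometer axis.
    for raw in (0, 256, 512, 768, 1023):
        print(raw, round(cutoff_hz(raw), 1))
```

An exponential curve tends to feel more even under the hand than a linear one, which may be part of what makes a mapping feel ‘playable’.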

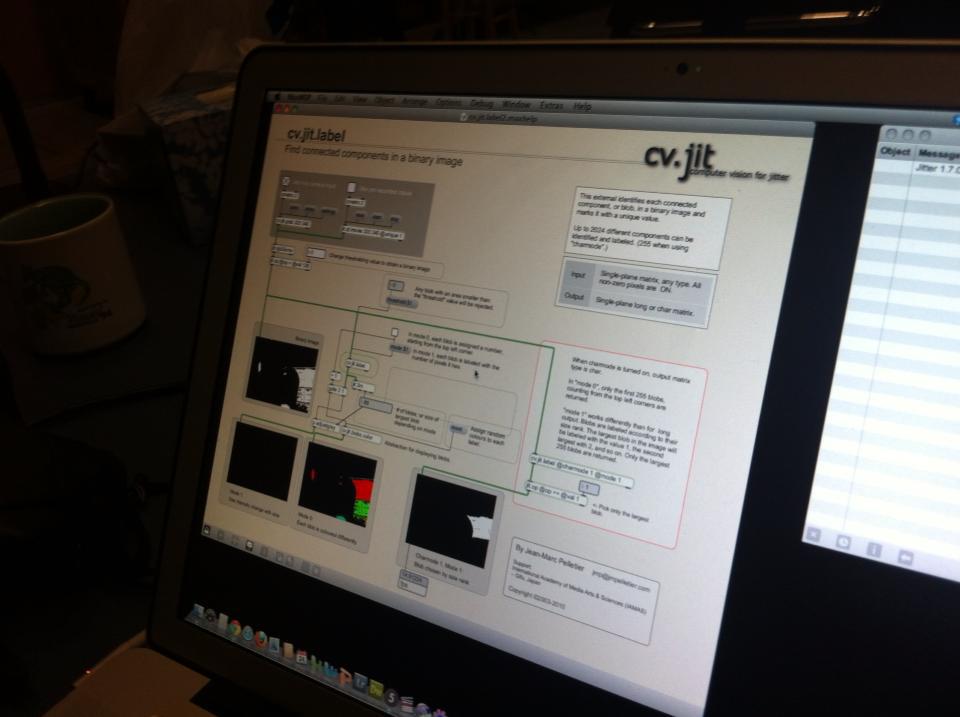

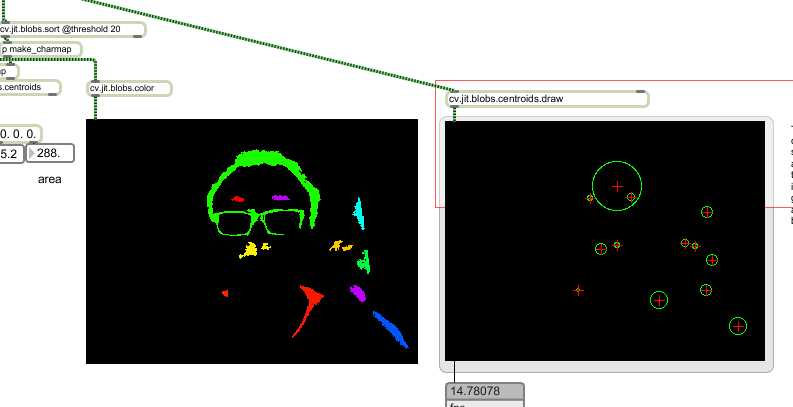

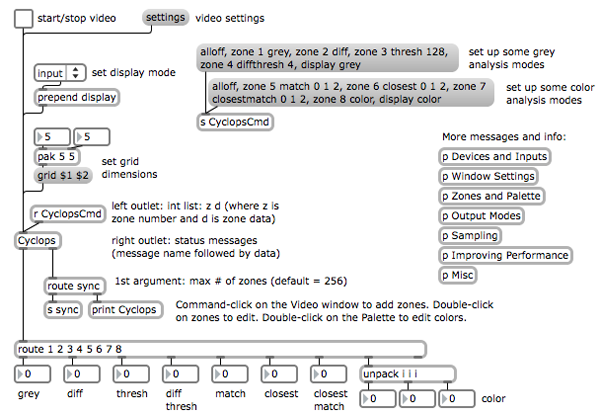

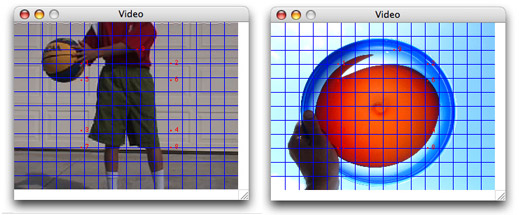

In the final week we experimented with a range of sensing techniques for picking up the position of performers on a stage. We tried both the cv.jit and Cyclops systems within Max/MSP, and both proved to be excellent. However, because they are video tracking systems, they are inherently light dependent, so their output and behaviour are fundamentally affected by changing light states and conditions.

All of this is manageable with precise light control and programming (or the use of infrared cameras). In the absence of more sophisticated lighting and camera resources, however, we decided to use ultrasonic range finders on the stage to locate the position of performers.

Two of these devices were placed on either side of the stage, outputting a stream of continuous controller data based on the performers’ proximity to the devices. These sensors were connected to Tim’s computer via an Arduino Uno. This gave us a simple proximity sensing system on stage. The system was robust to changes in light, but suffered from occasional random noise/jitter that would last for a few seconds without obvious cause. We therefore had to apply heavy signal conditioning to the source data in Max/MSP to smooth out these ‘errors’, which in turn introduced a significant amount of latency. Given these constraints, we used these sensors to drive events that did not have critical timing dependencies and could ramp in and out more gradually.
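The trade-off between smoothing and latency can be illustrated with a short Python sketch. This is not our Max/MSP conditioning chain. It assumes a median filter followed by exponential smoothing, and the class name, window size and `alpha` values are hypothetical.

```python
from collections import deque
from statistics import median


class RangeSmoother:
    """Median filter followed by exponential smoothing (a one-pole low-pass)."""

    def __init__(self, window=5, alpha=0.1):
        self.window = deque(maxlen=window)  # recent raw readings
        self.alpha = alpha                  # smaller alpha = heavier smoothing
        self.value = None                   # current smoothed output

    def update(self, reading):
        self.window.append(reading)
        med = median(self.window)           # rejects short spikes
        if self.value is None:
            self.value = med
        else:
            self.value += self.alpha * (med - self.value)
        return self.value


def steps_to_settle(smoother, start, target, tolerance=0.05, max_steps=1000):
    """Count updates until the output is within tolerance of a step change."""
    smoother.update(start)
    span = abs(target - start)
    for step in range(1, max_steps + 1):
        if abs(smoother.update(target) - target) <= tolerance * span:
            return step
    return max_steps


if __name__ == "__main__":
    # Heavier smoothing (smaller alpha) takes more updates to follow a move.
    for alpha in (0.5, 0.1, 0.05):
        steps = steps_to_settle(RangeSmoother(alpha=alpha), 0.0, 100.0)
        print(alpha, steps)
```

A short median window only removes brief spikes. Noise bursts lasting a few seconds call for a longer window or a smaller `alpha`, and both increase the number of updates before the output catches up with a real movement.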

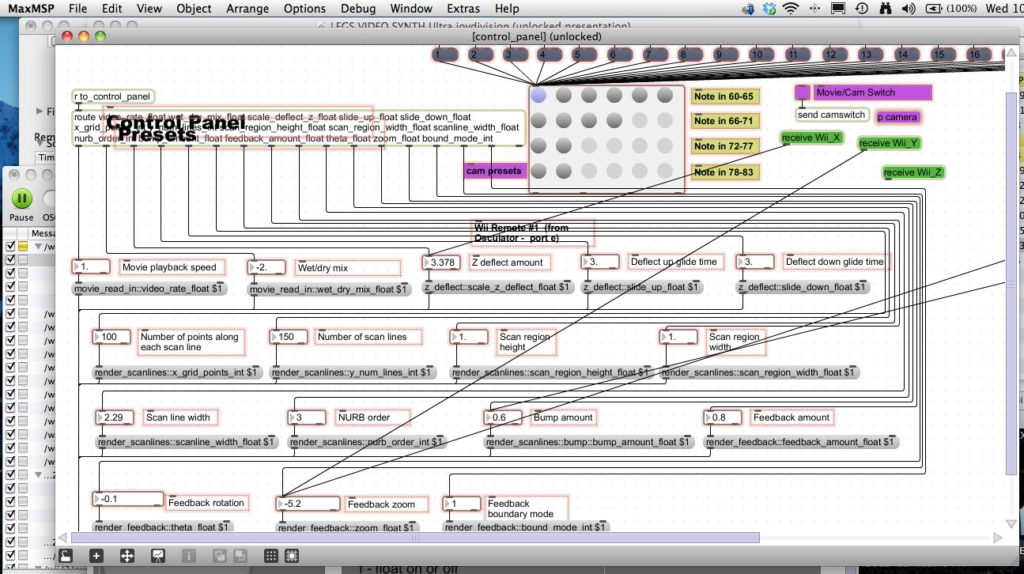

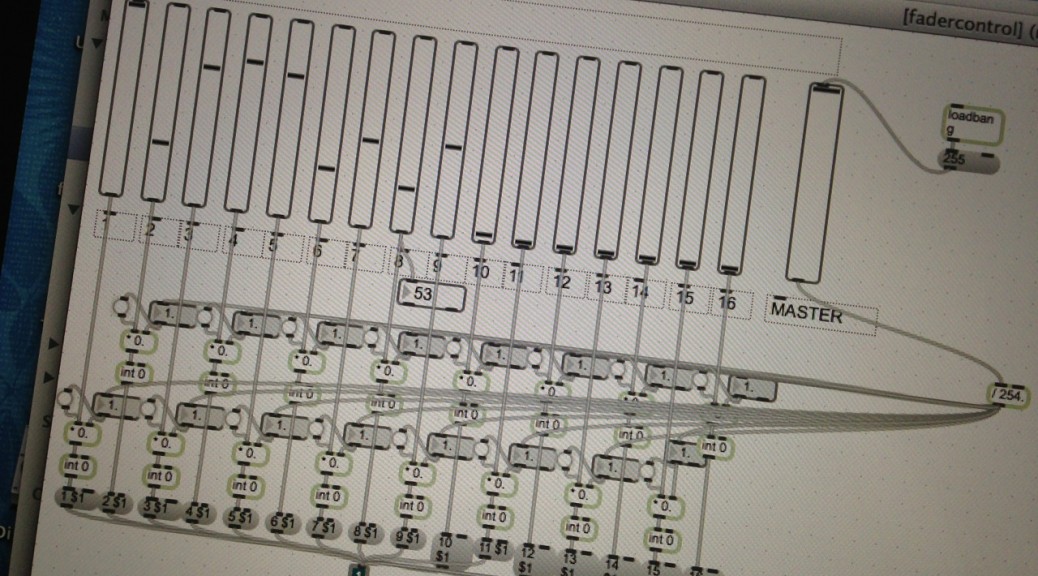

Julian spent a number of days in the final week programming the relationships between the sensing systems and the video elements, working with ‘retro’ 70s scanline/raster style video synth processing and time domain manipulations of QuickTime movies. Julian’s computer also operated as a kind of ‘data central’, with all incoming sensor data arriving there and then being mapped and directed out to the other computers from a central patch in Max/MSP + Jitter.